What is Crawl Rate?

Crawl rate is the number of crawl requests per second that a search engine’s automated robots make to a website.

These automated robots are known as bots, spiders, or crawlers.

Their purpose is to discover and scan pages by following links from one page or website to another.

Examples include Googlebot for Google, Bingbot for Bing, and Slurp for Yahoo.
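To make the bot names concrete, here is a minimal sketch of how a site might recognize these crawlers from the User-Agent header of a request. The function name `identify_crawler` and the `BOT_TOKENS` table are illustrative assumptions, not part of any library. Keep in mind that a User-Agent string can be faked, so this only shows which crawler a request claims to be.

```python
from typing import Optional

# Substrings each crawler includes in its User-Agent header.
# The table and function below are illustrative, not a library API.
BOT_TOKENS = {
    "googlebot": "Google",
    "bingbot": "Bing",
    "slurp": "Yahoo",
}

def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the search engine a User-Agent claims to belong to, or None."""
    lowered = user_agent.lower()
    for token, engine in BOT_TOKENS.items():
        if token in lowered:
            return engine
    return None

ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(identify_crawler(ua))  # Google
```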

Once the bots go through your pages, they store this information in massive databases to be recalled when a related search query is made.

Many factors decide the order in which web pages are crawled, such as the number of pages linking to them and the number of visitors.

What is Crawl Budget?

Crawl budget is the number of pages a crawler can crawl and index on a website in a given timeframe.

Google has an algorithm that decides the crawl rate for each website.

For example, Google might crawl just five pages on one website but 5,000 on another.

The budget is determined by many factors, such as the size of your site and its popularity, authority, and number of backlinks.

Why Does Crawl Rate Matter?

The crawl rate is essential for SEO. If you create high-quality content that doesn’t get crawled properly, all that hard work goes to waste.

Google must index your website properly for it to compete for organic rankings on SERPs, and indexing is only possible when Googlebot crawls your site adequately.

For big websites, such as e-commerce sites with thousands of pages, it can be challenging for crawlers to cover every page in one visit. But if crawlers keep returning to your website, all your pages can get crawled quickly.

If you add new pages to a website that has a high crawl rate, they will get crawled and indexed soon.

Because websites keep updating and changing their content, you need a high crawl rate for these changes to be tracked and indexed promptly.

Given all this, every site owner benefits from a high crawl rate. To achieve it, you have to make your site easy to crawl.

A high crawl rate also gives you a better chance of ranking. If your website is not crawled correctly, it won’t rank.

But remember: Google doesn’t index everything it crawls and doesn’t show everything it indexes. So crawling is not a direct ranking factor.

Factors Determining Crawl Rate:

Website’s Response:

Crawlers favor fast-responding websites. If your website is slow or returns server errors, the limit on how much bots will crawl goes down, and they will recrawl the site less frequently.

Google states that a faster site not only improves the crawl budget but also improves user experience.

Updating Website:

Googlebot favors fresh content. If you add new content and update old content, it will crawl your site regularly. A common example is a news publishing site, which gets updated almost every minute.

Google can determine how often a particular page on your site is updated and decide to visit it more often than other pages.

Domain Authority:

Popular websites are always a top pick. Google prioritizes sites that have proven to create value and have driven traffic over time, because this helps it deliver better results to users.

Backlinks:

Links are the paths that bots follow from one website to another. Each backlink is another opportunity for crawlers to reach your site, so five backlinks give them five more ways in.

A link from one website to another also works like a vote. It indicates to Google that the linked website is trusted in its niche, making it an excellent choice to be crawled.

Sections Of The Website:

Google sets the crawl rate at the host level. For example, if one section of your site is an e-commerce store and another is a blog, make sure both sections respond equally fast.

Otherwise, if one section is difficult to crawl, the crawl rate of both sections will go down.

How To Check Crawl Rate?

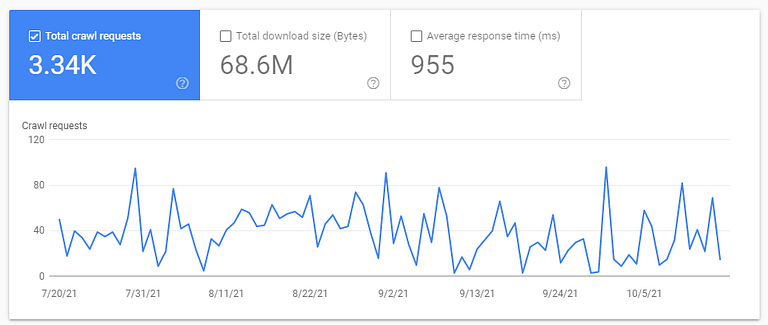

Use the Google Search Console tool to check the data on your crawl rate. It shows the regular Googlebot activity on your site.

It includes the number of crawl requests made, server response, availability issues, etc.

Based on this data, you can improve your site.

In Google Search Console, go to Settings > Crawl stats.

It will give you in-depth data on crawl stats for the past 90 days.
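If you also have access to your server logs, you can cross-check the same kind of numbers yourself. The sketch below is only an illustration: it assumes the logs use the common "combined" access log format, the sample lines are made up, and `googlebot_stats` is a hypothetical helper. It counts requests that claim to be Googlebot per day and per response status.

```python
import re
from collections import Counter
from datetime import datetime

# Made-up sample lines in the "combined" access log format (an assumption).
SAMPLE_LOG = """\
66.249.66.1 - - [10/Mar/2024:06:00:01 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/Mar/2024:06:00:05 +0000] "GET /shop HTTP/1.1" 200 7301 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
203.0.113.9 - - [10/Mar/2024:06:00:07 +0000] "GET /blog HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
66.249.66.1 - - [11/Mar/2024:09:30:00 +0000] "GET /old HTTP/1.1" 503 0 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
"""

# Captures the timestamp in brackets and the status code after the request.
LINE_RE = re.compile(r'\[(?P<ts>[^\]]+)\] "[^"]*" (?P<status>\d{3}) ')

def googlebot_stats(log_text):
    """Count requests claiming to be Googlebot, per day and per status code."""
    per_day = Counter()
    per_status = Counter()
    for line in log_text.splitlines():
        if "Googlebot" not in line:
            continue
        match = LINE_RE.search(line)
        if not match:
            continue
        ts = datetime.strptime(match["ts"], "%d/%b/%Y:%H:%M:%S %z")
        per_day[ts.date().isoformat()] += 1
        per_status[match["status"]] += 1
    return per_day, per_status

per_day, per_status = googlebot_stats(SAMPLE_LOG)
print(dict(per_day))     # {'2024-03-10': 2, '2024-03-11': 1}
print(dict(per_status))  # {'200': 2, '503': 1}
```

A rising share of 5xx responses in a tally like this is the kind of server response problem the crawl stats report also surfaces.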

While crawling is essential, crawlers that visit your pages too often can cause over-crawling. This adds load to your server, which reduces the capacity left for visitors and raises server costs.

To fix this:

- Check the data in the crawl stats report, then go to the Crawl Rate Settings page and file a special request.

- Use a robots.txt file. This file tells crawlers which URLs they can access on the site.
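As a rough illustration of the robots.txt option, the sketch below uses Python's standard `urllib.robotparser` module to check which URLs a set of rules would allow. The rules and the `example.com` URLs are hypothetical; adapt the paths to the sections of your own site that crawlers don't need to visit.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep all crawlers out of cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

allowed_url = "https://example.com/products/shoes"
blocked_url = "https://example.com/cart/checkout"

# can_fetch() reports whether the given user agent may request the URL.
print(parser.can_fetch("Googlebot", allowed_url))  # True
print(parser.can_fetch("Googlebot", blocked_url))  # False
```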

How To Increase Crawl Rate?

Better internal linking of pages:

As mentioned earlier, pages with more links (internal and external) pointing to them have a better chance of getting crawled.

The better your internal linking, the better the chances that your pages get crawled.

Flat website architecture:

A well-structured website makes it easy for crawlers to navigate through it.

Ideally, the home page should link to category pages, which in turn link to the product pages.

Every product or service page should be no more than three clicks away from the home page.
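One way to check the three-click guideline is to compute each page's click depth with a breadth-first search from the home page. This is a minimal sketch: the `LINKS` graph is made-up data, and in practice you would build it from a crawl of your own site.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/shoes", "/bags"],
    "/shoes": ["/shoes/runner"],
    "/bags": ["/bags/tote"],
    "/shoes/runner": ["/shoes/runner/reviews"],
    "/bags/tote": [],
    "/shoes/runner/reviews": ["/shoes/runner/reviews/page-2"],
    "/shoes/runner/reviews/page-2": [],
}

def click_depths(links, home="/"):
    """Breadth-first search: fewest clicks needed to reach each page from home."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

depths = click_depths(LINKS)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
print(too_deep)  # ['/shoes/runner/reviews/page-2']
```

Pages that show up in `too_deep` are candidates for a direct link from a category page or the home page.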

Avoid orphan pages:

A page that has no links pointing to it from any other page on the website is known as an orphan page. Such pages cannot be found by crawling the internal links.

This makes it difficult for search engine bots to discover them.

To get such pages crawled, add their URLs to the XML sitemap or link to them from other related pages.
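Finding orphan pages comes down to comparing the pages you know exist with the pages that receive at least one internal link. The sketch below assumes you can export both lists, for example from your CMS and a site crawl. `ALL_PAGES`, `LINKS`, and `find_orphans` are illustrative names with made-up data.

```python
# Hypothetical data: every page the site has, and the internal links between them.
ALL_PAGES = {"/", "/about", "/blog", "/blog/post-1", "/landing/spring-sale"}
LINKS = {
    "/": ["/about", "/blog"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog"],
}

def find_orphans(all_pages, links, home="/"):
    """Return pages that no other page links to (the home page is excluded)."""
    linked = set()
    for source, targets in links.items():
        # A page linking to itself does not make it discoverable.
        linked.update(target for target in targets if target != source)
    return sorted(all_pages - linked - {home})

orphans = find_orphans(ALL_PAGES, LINKS)
print(orphans)  # ['/landing/spring-sale']
```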

Do not use duplicate content:

If a single page is accessible from more than one URL, for example on more than one domain, Google will consider those URLs duplicate versions of the same page.

In this case, Google will choose the one URL it considers the best version and crawl that. All other URLs will be crawled less frequently.
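To spot this situation on your own site, you can group URLs whose content is identical. The sketch below is a simplified illustration that hashes each page body after collapsing whitespace and case. The `PAGES` data and the `group_duplicates` helper are assumptions for the example, and this approach only catches exact duplicates, not near-duplicates. It says nothing about how Google itself detects them.

```python
import hashlib
from collections import defaultdict

# Hypothetical crawl result: URL -> page body.
PAGES = {
    "https://example.com/shoes": "<h1>Shoes</h1> Our range.",
    "https://www.example.com/shoes": "<h1>Shoes</h1>   Our range.",
    "https://example.com/bags": "<h1>Bags</h1> Our totes.",
}

def group_duplicates(pages):
    """Group URLs whose bodies are identical after normalizing whitespace and case."""
    groups = defaultdict(list)
    for url, body in pages.items():
        normalized = " ".join(body.split()).lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

duplicates = group_duplicates(PAGES)
print(duplicates)
# [['https://example.com/shoes', 'https://www.example.com/shoes']]
```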

Do not use stale content:

As mentioned earlier, sites with updated content have a better chance of getting crawled. Stale content not only lowers your crawl rate but also hampers user experience.

Site speed:

If your pages load fast, Googlebot can get through more URLs. On a slow website, most of its time is eaten up waiting for pages to load.
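A bit of back-of-the-envelope arithmetic shows the effect. The sketch below assumes, purely for illustration, that a crawler spends a fixed amount of time on your site and fetches one page at a time. Real crawlers fetch in parallel and adapt to your server, so treat the numbers as a rough intuition rather than a prediction.

```python
def pages_fetched(time_budget_s: float, avg_response_s: float) -> int:
    """Pages a single sequential fetcher could load within the time budget."""
    return int(time_budget_s // avg_response_s)

# Same hypothetical 60-second visit, two different average response times.
print(pages_fetched(60, 0.5))  # 120
print(pages_fetched(60, 2.0))  # 30
```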

Fewer redirects:

Every time a crawler is redirected, you waste part of your crawl budget.

Keep redirects to a minimum and make sure no chain contains more than two of them. Longer chains can lead to bots dropping off your site midway.
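To find chains that break this guideline, you can walk your redirect rules and count the hops. The sketch below works on a made-up `REDIRECTS` map, which you would replace with an export of your own server or CMS redirect rules. `redirect_chain` is a hypothetical helper, and it also stops if it runs into a redirect loop.

```python
# Hypothetical redirect map: source URL -> URL it redirects to.
REDIRECTS = {
    "/old-shoes": "/shoes-2022",
    "/shoes-2022": "/shoes-2023",
    "/shoes-2023": "/shoes",
    "/promo": "/sale",
}

def redirect_chain(start, redirects, max_hops=10):
    """Follow redirects from start and return every URL visited, in order."""
    chain = [start]
    while chain[-1] in redirects and len(chain) - 1 < max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if chain.count(target) > 1:  # redirect loop detected
            break
    return chain

# Flag every starting URL whose chain has more than two redirects.
long_chains = {}
for url in REDIRECTS:
    chain = redirect_chain(url, REDIRECTS)
    if len(chain) - 1 > 2:
        long_chains[url] = chain

print(long_chains)
# {'/old-shoes': ['/old-shoes', '/shoes-2022', '/shoes-2023', '/shoes']}
```

The usual fix is to point the first URL straight at the final destination, so `/old-shoes` would redirect directly to `/shoes`.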
